Data Processing Secrets Every Business Needs to Know

Data processing techniques apply to a wide range of situations, covering both real-time and batch processing. However, a false divide between batch and streaming processing makes it harder for organisations to improve the quality and availability of information. Combining batch and streaming processing in a unified data architecture can improve the cost, quality, and availability of data, helping businesses make better decisions and predict costs in real time. Organisations also need skilled workers and security measures in place. To maximise the benefits of data processing: evaluate your data infrastructure, define your data processing needs, implement a unified data architecture, invest in employee training, and ensure data security and compliance.

Data processing methods are now an important part of how businesses work. They involve gathering information, analysing it, and using it to make better-informed choices. Many businesses assume, however, that some techniques only suit a narrow set of cases; for example, that real-time data processing is only needed for a handful of specialised applications. As a result, they miss the chance to improve the quality and availability of information across the whole business.

In reality, data processing techniques can be used in a wide range of situations, including both real-time processing and processing in batches. One of the most important things about data processing is that it gives businesses a more complete picture of how they run. By processing data in real time or very close to real time, businesses can learn things about their operations that they might not have noticed otherwise. They can look at their data and find patterns, trends, and oddities that help them make better decisions.

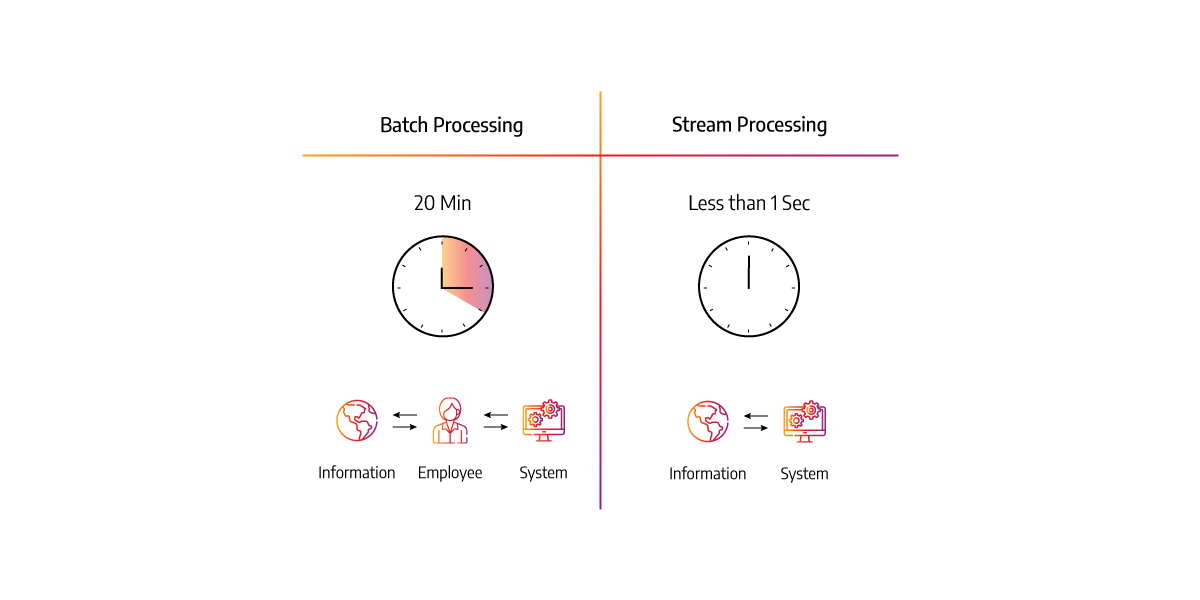

But there is a false divide between batch processing and streaming processing, which can make it hard for organisations to get the most out of data processing. In the past, data teams would ask their business for a list of data applications and set the desired data speed for each one. Conventions in data warehousing that go back decades say that data that doesn’t need to be real-time should be loaded in batches every night or at most once an hour. Then, data architects save streaming technology for specialised real-time applications that need latency times of less than a minute.

This is a common way to make a choice, but it creates a false divide between batch processing and streaming processing. This separation can make it harder for data-driven teams to improve the quality and availability of information across their business. Instead, organisations should make streaming a key part of their data infrastructure, whether or not they use “real-time” data.

There are many ways that streaming technology can improve the cost, quality, and availability of data. Let’s say a health insurance company, which we’ll call HealthX, wants to keep track of how many claims are being paid out each day. At the moment, they only get batch data once a day, which isn’t enough to keep them up to date. But they don’t want to switch to streaming because they think it can only be used for things that happen in real time.

With a unified data architecture that combines batch and streaming processing, HealthX can build a scheduling framework that fits their end-to-end latency needs. They can make a list of all the data sources that are available, what they are like, and how often they are updated. By starting with the end application and the final value, they can work backwards to figure out the end-to-end latency needs of the business.
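The inventory-and-work-backwards step can be sketched in a few lines of Python. This is a minimal illustration rather than a real scheduling framework: the source names, update intervals, and freshness targets are all hypothetical, as are the `DataSource`, `Application`, `required_refresh`, and `find_gaps` names.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class DataSource:
    """One entry in the inventory of available data sources (hypothetical)."""
    name: str
    update_interval: timedelta  # how often the source delivers new data today


@dataclass(frozen=True)
class Application:
    """An end application and the freshness the business needs from it."""
    name: str
    max_staleness: timedelta  # end-to-end latency requirement
    sources: Tuple[str, ...]


def required_refresh(applications: List[Application]) -> Dict[str, timedelta]:
    """Work backwards from applications: the tightest freshness need per source."""
    needed: Dict[str, timedelta] = {}
    for app in applications:
        for src in app.sources:
            if src not in needed or app.max_staleness < needed[src]:
                needed[src] = app.max_staleness
    return needed


def find_gaps(inventory: List[DataSource], needed: Dict[str, timedelta]) -> List[str]:
    """Sources that update less often than some application needs.

    A source that is required but missing from the inventory also counts as a gap.
    """
    by_name = {s.name: s for s in inventory}
    gaps = []
    for name, need in needed.items():
        src = by_name.get(name)
        if src is None or src.update_interval > need:
            gaps.append(name)
    return sorted(gaps)


# Hypothetical HealthX inventory: both sources arrive as a daily batch.
inventory = [
    DataSource("claims_db", update_interval=timedelta(hours=24)),
    DataSource("members_db", update_interval=timedelta(hours=24)),
]
# Hypothetical applications and their freshness targets.
applications = [
    Application("claims_dashboard", timedelta(hours=1), ("claims_db",)),
    Application("monthly_report", timedelta(days=30), ("claims_db", "members_db")),
]

needed = required_refresh(applications)
print(find_gaps(inventory, needed))
```

In this made-up example only the claims source falls short: the dashboard needs hourly data, while the monthly report is comfortably served by the existing daily load.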

This framework gives business stakeholders the ability to “turn the knob” on how fresh, expensive, and good the data is. For example, they could change how often the data is updated so that they could keep track of claims being processed every hour or almost in real time. This would help them make better decisions, find problems in their workflow for processing claims, and fix them quickly.
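One way to picture the knob is a pipeline whose business logic is written once and whose trigger interval is a setting. The sketch below is plain Python, not the API of any streaming product, and the claim timestamps, function names, and intervals are hypothetical. It counts paid claims per day, processing only the records that arrived since the last run.

```python
from collections import Counter
from datetime import datetime, timedelta


def count_paid_by_day(claims):
    """Core business logic, written once: paid claims per calendar day."""
    return Counter(paid_at.date() for paid_at in claims)


def run_with_trigger(claims, start, end, trigger):
    """Process claims incrementally, one run per trigger interval.

    Each run reads only the claims paid since the previous run (tracked by
    a checkpoint) and merges them into the running totals.
    """
    totals = Counter()
    runs = 0
    checkpoint = start
    while checkpoint < end:
        window_end = min(checkpoint + trigger, end)
        new_claims = [c for c in claims if checkpoint <= c < window_end]
        totals += count_paid_by_day(new_claims)
        checkpoint = window_end
        runs += 1
    return totals, runs


# Hypothetical claim payment timestamps over two days.
claims = [
    datetime(2024, 1, 1, 9, 15),
    datetime(2024, 1, 1, 17, 40),
    datetime(2024, 1, 2, 8, 5),
]
start, end = datetime(2024, 1, 1), datetime(2024, 1, 3)

daily_totals, daily_runs = run_with_trigger(claims, start, end, timedelta(days=1))
hourly_totals, hourly_runs = run_with_trigger(claims, start, end, timedelta(hours=1))

# Same answer either way; only freshness and the number of runs differ.
print(daily_totals == hourly_totals, daily_runs, hourly_runs)
```

Shortening the trigger interval makes the totals fresher at the price of more, smaller runs, while the counting logic itself never changes.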

Data architectures that combine stream processing with batch processing can also lower the costs of both maintenance and computing. Processing data incrementally needs less peak computing power than running big batches, and the cost should scale roughly linearly with the amount of data. They can also make costs easier to predict, because cost spikes and bottlenecks become visible in real time.

Recent improvements in streaming technology, such as the simplification and unification of streaming with existing batch-based APIs and storage formats, make it possible for organisations to use the reliability of streaming tools even when they don’t need seconds or milliseconds of latency. For example, Apache Spark offers a single DataFrame API, through Structured Streaming, that works for both batch and streaming. Other tools, such as ksqlDB and Apache Flink, also support a simple SQL API for streaming.

It is important for organisations to realise that data processing techniques can be used in many different situations, and the false dichotomy between batch and streaming processing can limit the benefits of data processing. Instead, a unified data architecture can improve data quality, cost, and access to information by combining batch and streaming processing. By making a list of all available data sources, defining scheduling rules based on the business’s end-to-end latency needs, and adjusting the knob for data freshness, organisations can make better decisions, be more flexible, and save money.

But it is important to make sure that the data processing methods fit the needs of the organisation. Using a technology just because it’s cool might not give you the benefits you want. So, organisations need to understand their use cases, figure out how up to date the data needs to be, and choose the right technologies for their needs.

It’s also important to have skilled workers who know how to use data processing technologies well. Organisations should put money into training their workers so they can learn the skills they need to run the systems. Finally, they should make sure that their data is safe and that the systems follow all laws and rules about data protection.

Conclusion

Data processing techniques have the potential to help organisations in a lot of ways, but they need to be used correctly. By using a unified data architecture and combining batch and streaming processing, organisations can improve the quality of their data, lower their costs, and make it easier for people to get information. Data processing techniques can help companies learn more about their operations and make better decisions if they have the right people on staff and security measures in place.

And if you’re non-technical, here are 5 tips to take to your data teams today to get a healthy, cost-effective conversation started.

- Evaluate your current data infrastructure: Take a close look at your current data infrastructure and identify areas where you can integrate streaming technology. Consider where real-time or near-real-time data processing could provide valuable insights to your business.

- Define your data processing needs: Determine the level of data freshness your business requires for different use cases. This will help you determine the right balance of batch and streaming processing for your needs.

- Implement a unified data architecture: Consider adopting a unified data architecture that combines both batch and streaming processing. This will allow you to process data in real time or near real time, providing you with valuable insights into your operations.

- Invest in employee training: Ensure that your employees have the necessary skills to manage and operate the data processing systems effectively. Investing in employee training can help maximise the benefits of data processing for your business.

- Ensure data security and compliance: Make sure that your data is secure and that the systems you use comply with relevant data protection laws and regulations. Data protection is crucial, and failure to comply can result in significant legal and financial consequences.

If you’re looking to maximise the benefits of data processing for your business, are considering adopting a unified data architecture that combines both batch and streaming processing, or want validation of an existing data processing strategy, our data architects here at Covelent are on hand to answer any questions you may have.
